You’ve probably heard a lot about AI Agents. It may seem like it’s the latest buzzword on the AI hype train, and that’s totally understandable. Companies do use buzzwords to promote their products, and the field of AI is littered with buzzwords. This article discusses AI Agents in the context of software development. While “AI Agent” is a buzzword, we’re convinced that it is going to change the way we make software forever.

What is an AI Agent?

An AI Agent is a program that can make and execute plans, use tools, and iterate to achieve a goal. Raw Large Language Models (LLMs) only respond to prompts, but an agent goes one step further by actively deciding which actions to take next (or which tools to use) to solve problems. This is how an agent achieves autonomy.

How Agents Perform Tasks

A task is a single piece of work that an agent performs. It should have a clear aim and clear criteria that the agent can use to judge when it has finished. An agent typically works through a task in the following stages.

Setting Goals: you give the agent an objective.

Gathering Context: the agent examines the task and its context, which could include user instructions, data from files, or environment data.

Planning: the agent writes the steps to complete the goal. This involves selecting which tools to use, such as external APIs, terminal commands, or database queries.

Execution: the agent executes the plan step-by-step by retrieving information, calling APIs, writing code, and reading documents.

Self-Correction: the agent evaluates the outcome and can instruct itself to make a correction on the next iteration. If a step fails, the agent can try again.
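The stages above can be sketched as a small loop. This is a minimal illustration rather than how any particular agent is built: `Task`, `run_agent`, and the `demo_*` callbacks are hypothetical names, and the callbacks are plain functions standing in for LLM calls and real tools.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    goal: str                                    # Setting Goals
    context: dict = field(default_factory=dict)  # Gathering Context
    max_iterations: int = 3


def run_agent(task, plan, execute, is_done):
    """Plan, execute, and self-correct until the goal is met or we give up."""
    feedback = None
    for attempt in range(1, task.max_iterations + 1):
        steps = plan(task, feedback)                                # Planning
        results = [execute(step, task.context) for step in steps]  # Execution
        if is_done(results):                                        # Self-Correction check
            return attempt, results
        feedback = results  # the failed outcome informs the next plan
    raise RuntimeError(f"gave up after {task.max_iterations} attempts")


# Stub callbacks (assumptions for this demo, not a real model).
def demo_plan(task, feedback):
    # The first plan guesses the wrong file; the retry reacts to the feedback.
    return ["notes.txt"] if feedback is None else ["README.md"]


def demo_execute(step, context):
    return context["files"].get(step, "ERROR: file not found")


def demo_is_done(results):
    return all(not result.startswith("ERROR") for result in results)


task = Task(
    goal="Summarise the project README",
    context={"files": {"README.md": "A demo project."}},
)
attempts, results = run_agent(task, demo_plan, demo_execute, demo_is_done)
print(attempts, results)
```

In this demo the first attempt fails, the failure is passed back to the planner, and the second attempt succeeds. A real agent would replace the stubs with model calls and tool invocations.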

The Role of Tools

Tools are external resources or functionalities that an agent can use to accomplish a task. These could range from accessing a database to running a command at the terminal to calling a web API or running Python scripts to parse data. AI Agents can offload specialized tasks to well-tested, efficient solutions, which gives the agent the ability to perform tasks on behalf of the user.
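One common way to expose tools is a registry of ordinary functions that the agent can call by name. The sketch below assumes the model emits tool calls as JSON in the shape `{"tool": ..., "args": {...}}`. That shape, the `tool` decorator, and the two example tools are our own illustrative choices, not a specific vendor's API.

```python
import csv
import io
import json

TOOLS = {}


def tool(func):
    """Register a function so the agent can call it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


@tool
def sum_column(csv_text: str, column: str) -> float:
    """Parse CSV text and add up one numeric column."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return sum(float(row[column]) for row in rows)


def dispatch(tool_call_json: str):
    """Run a tool call of the assumed form {"tool": name, "args": {...}}."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["tool"])
    if func is None:
        return f"ERROR: unknown tool {call['tool']!r}"
    return func(**call["args"])


# A tool call as the model might emit it (hypothetical example data).
call = json.dumps({
    "tool": "sum_column",
    "args": {"csv_text": "item,price\nA,1.5\nB,2.5", "column": "price"},
})
total = dispatch(call)
print(total)
```

The model never has to do the arithmetic itself. It only has to pick the right tool and arguments, and a well-tested function does the rest.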

Self-Correction Through Tools

Agents can self-correct with tools to verify or refine their work. For example, if the agent is building software, it can:

- Use a compiler or linter to detect syntax errors.

- Write unit tests to check for logic flaws.

- Perform API calls to gather real-time data to validate assumptions.

When an error surfaces, the agent can adjust its plan. This iterative feedback loop enables better overall accuracy than a raw LLM that only makes one-shot predictions.
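As a rough illustration of this feedback loop, the sketch below checks candidate code with Python's built-in `compile()` (acting as the syntax checker) and a tiny unit test, and collects the error messages that would be fed back to the model. The `drafts` list is hypothetical and stands in for successive LLM outputs. A real agent should run generated code in a sandbox rather than calling `exec` directly.

```python
def check_syntax(source: str):
    """Return None if the source compiles, otherwise a short error message."""
    try:
        compile(source, "<candidate>", "exec")
        return None
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"


def check_behaviour(source: str):
    """Run a tiny unit test against the candidate's add() function."""
    namespace = {}
    exec(source, namespace)  # unsafe for untrusted code; sandbox in practice
    if namespace["add"](2, 3) != 5:
        return "add(2, 3) did not return 5"
    return None


def self_correct(candidates):
    """Try candidates in order, collecting tool feedback until one passes."""
    feedback = []
    for source in candidates:
        error = check_syntax(source) or check_behaviour(source)
        if error is None:
            return source, feedback
        feedback.append(error)  # would be sent back to the model
    return None, feedback


# Hypothetical successive drafts a model might produce for the same task.
drafts = [
    "def add(a, b) return a + b",        # syntax error
    "def add(a, b):\n    return a - b",  # logic error
    "def add(a, b):\n    return a + b",  # correct
]
accepted, feedback = self_correct(drafts)
print(accepted)
print(feedback)
```

The first draft is rejected by the syntax check, the second by the unit test, and the third is accepted.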

Context Window and RAG

What is a Context Window?

All large language models (LLMs) work with a context window, which defines how much text or data the model can see at once. The window is measured in tokens, and its size is often called the “token limit”. Understanding context windows is critical because filling a larger context window requires more computing power and costs more to run.

For example, at the time of writing this article, o1-mini has a context window of 128,000 tokens (with up to 65,536 output tokens), while o1 has a context window of 200,000 tokens (with up to 100,000 output tokens). According to ChatGPT, in English, “100k tokens typically represents ~75,000–80,000 words of text”.

Once you surpass that limit, the model “forgets” older parts of the conversation unless they’re summarized or reintroduced. Overloading the context window usually results in degraded or garbled outputs.
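A simple way to stay inside the limit is to keep only the most recent messages that fit a token budget. The sketch below estimates tokens from word counts using the rough "100k tokens is about 75,000 words" ratio mentioned above. It is an approximation, not a real tokenizer, and `estimate_tokens` and `fit_to_window` are hypothetical helper names.

```python
WORDS_PER_TOKEN = 0.75  # rough ratio: 100k tokens is about 75,000 words


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the word count (not a real tokenizer)."""
    words = len(text.split())
    return max(1, round(words / WORDS_PER_TOKEN))


def fit_to_window(messages, token_limit):
    """Keep the most recent messages whose estimated tokens fit the limit."""
    kept, used = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > token_limit:
            break  # everything older is dropped ("forgotten")
        kept.append(message)
        used += cost
    return list(reversed(kept)), used


history = [
    "Please refactor the billing module",
    "Done, I split it into three files",
    "Now add unit tests for the invoice parser",
]
kept, used = fit_to_window(history, token_limit=20)
print(kept, used)
```

With a budget of 20 estimated tokens, the oldest message no longer fits and is dropped. An agent could summarize it instead of discarding it outright.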

RAG (Retrieval Augmented Generation)

Retrieval Augmented Generation (RAG) is a technique where the model uses an external retrieval system to gather relevant information based on the user’s query or context. Rather than relying on a single prompt, the agent retrieves the most relevant data from a large dataset to create the context. It usually does this with a vector search, which allows for semantic searching rather than traditional text searches. This effectively expands the working memory beyond the capacity of the LLM context window because the agent can swap in new context as needed for each task.

How an Agent Uses RAG

- The agent receives a question or task.

- It searches a knowledge base (through vector searches or indexing) to find relevant documents, code, or facts.

- It extracts only the relevant text, so that irrelevant material doesn’t use up the context window.

- The agent adds this retrieved content to the LLM prompt, which effectively gives the model more context.

- The model then generates a response using both the original prompt and the retrieved information.

This allows smaller or more efficient models to maintain performance on complex tasks without needing an astronomically large built-in context window, which costs more money, power and time.
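The retrieval steps listed above can be sketched in a few lines. To keep the example self-contained, `embed` below is a toy bag-of-words vector, which is a lexical stand-in for the semantic embeddings a real vector search would use. The knowledge base, function names, and prompt layout are all illustrative assumptions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Add only the retrieved text to the prompt to save on context."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base for the demo.
knowledge_base = [
    "The invoice parser reads PDF invoices and extracts line items.",
    "Deployment uses a staging environment before production.",
    "Unit tests live in the tests folder and run with pytest.",
]
prompt = build_prompt("How do I run the unit tests?", knowledge_base)
print(prompt)
```

Only the document about unit tests ends up in the prompt, so the model sees the relevant fact without the rest of the knowledge base taking up context.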

AI Software Development Agents in Action

Nimblesite has developed a local AI agent for development purposes. We are refining this and will use it to assist us when building your software if you are happy for us to do so. We are looking to move this to the cloud as a completely autonomous and configurable cloud-based SaaS product. Reach out to us if you need a custom AI agent for your team.

Currently, the most straightforward way to see an AI Software Agent in action is to install an IDE that has an agent built in. There are many available at this time, but Cursor is the most popular. It lets you use the AI agent directly inside the coding workspace. Here are some things it can do:

- Analyze your codebase, answer questions about it and make improvements.

- Search and read through your documentation to produce code that matches the documentation. Or, it can produce documentation based on your existing code.

- Run compilers, runtimes, or testing frameworks to validate the code on the fly.

Note that Cursor’s agent, by default, is not fully autonomous. It runs on your computer and generally asks you if you want to accept its work on each step. While it is a huge advancement in software development, this tool is designed to help you do software development. It isn’t designed to work autonomously like another employee.

AI Agents Are Not Hype

It’s true that “AI Agent” is the latest buzzword. Everyone is talking about the topic, but it’s not unwarranted hype. We’ve been using AI agents recently, and we’ve seen first-hand how powerful they can be for software development. We generally use Claude 3.5 Sonnet because it’s fast and accurate with tools.

Here are some of the things we’ve used AI agents for:

- Generating automated tests

- Installing missing dependencies on our development computers

- Finding bugs

- Explaining code

- Cleaning up code

- Implementing whole features

- Diagnosing issues

- Adding missing configuration

- Generating documentation

We already use AI Agents to pay back tech debt. For example, writing tests is a laborious task and usually doesn’t get done. We’ve found that even when we are tired and ready to finish up for the day, we can still get the agent to produce meaningful tests that lift the quality of the codebase.

In many cases, we asked the agent to implement some functionality. It found the relevant parts of the code, inserted the new code, and it worked the first time. It’s obvious that this is a game changer right now, but it’s also obvious that when developers have access to this kind of power running in the cloud with the ability to run tasks in parallel, this will massively improve our ability to deliver high-quality software.

Efficient Models as AI Agents

Power Consumption

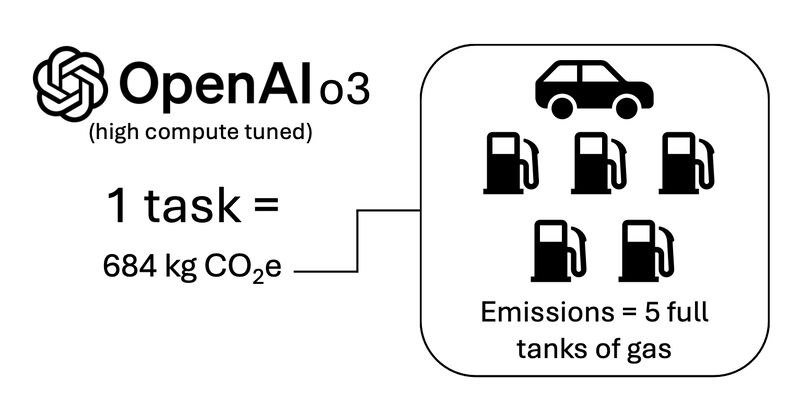

Some estimate that OpenAI’s o3 model could use as much energy as five tanks of petrol for a single task.

While this is only an estimate based on cost per call, it suggests that more powerful models are extremely energy hungry. If we build our AI infrastructure around these, AI will contribute significantly to carbon emissions.

This is a gigantic increase from what the OECD reported about GPT-3, which was already quite intensive.

“Our latest scenarios for GPT-3 indicate an energy consumption of 8–51 Wh per request.” ~ Ruf, B., OECD.AI

It’s clear that we need to do what we can to improve the efficiency of our use of AI.
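To put the 8–51 Wh per request range quoted above for GPT-3 into perspective, the per-request figure can be scaled to a workload. The request volume below is a hypothetical number chosen only to show the arithmetic. It is not a measurement.

```python
WH_PER_REQUEST_LOW = 8    # lower bound quoted above for GPT-3
WH_PER_REQUEST_HIGH = 51  # upper bound quoted above for GPT-3


def energy_range_kwh(requests: int):
    """Return the (low, high) energy estimate in kWh for a number of requests."""
    low = requests * WH_PER_REQUEST_LOW / 1000
    high = requests * WH_PER_REQUEST_HIGH / 1000
    return low, high


daily_requests = 1_000_000  # hypothetical workload, not a measured figure
low, high = energy_range_kwh(daily_requests)
print(f"{low:,.0f} to {high:,.0f} kWh per day")
```

Even at the lower bound, a million requests a day adds up to thousands of kilowatt-hours, which is why per-request efficiency matters.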

Balancing Cost, Power Consumption, Accuracy, Context Window, and Speed

We cannot base sustainable AI usage on ever-larger models. These massive models, while powerful, are also expensive to train, power-hungry, and usually slow to run.

“Achieving high accuracy in an ML model generally requires more computational resources due to the increased complexity in calculations, data processing, and additional training iterations.” ~ Markidis, S., Raff, D., et al.

However, efficient LLMs as AI agents provide a different path forward.

Enhanced Problem-Solving Through Tool Use

Smaller models can dynamically interact with external tools and APIs to solve tasks that would otherwise require the brute-force capabilities of a massive model. The targeted use of tools offloads complex computations and allows smaller models to perform as effectively as larger ones, but without the unnecessary costs.

Self-Correction with Iterative Reasoning

Agents can break down problems into manageable steps and revisit the outputs for later refinement. This allows smaller AI agents to achieve high levels of accuracy and reduces the need for overpowered models by ensuring smaller models adapt incrementally and only use the required context.

RAG & External Knowledge Integration

Retrieval-Augmented Generation (RAG) enables smaller models to access large, external knowledge bases as needed. This bypasses the limitations of a small context window and allows the agents to handle complex, knowledge-intensive tasks without the extensive memory and power consumption of a larger model.

The Future of AI: Efficiency Over Arms Race

The future of AI is not about ever-increasing power consumption and context window size. It is about efficient models that balance cost and power consumption with accuracy and speed.

As AI companies create hype and FOMO around bigger and bigger models, they often neglect that AI agents can achieve a lot more with far less. Models like o1 are powerful but come with a hefty price tag in terms of speed, power, and cost.

Even with all this power, they are not infallible on the first try. All good software developers understand the importance of feedback and iteration.

Local and Green

Apple has demonstrated that even smartphones can run basic AI models locally. Apple Intelligence already does text completion, on-device speech recognition, image classification, and private text-to-speech on iPhone. As hardware continues to improve, running advanced local AI models will become increasingly feasible. With agent functionality, these local models can use external tools and RAG to expand their capabilities and become just as powerful as the more compute-intensive models.

Local and efficient models won’t destroy the environment. Like all advancements in computing, it’s about efficiency over brute force. The biggest computing revolution occurred with the proliferation of the microchip, not with the advent of mainframes.

If we focus on efficient, locally runnable models, we will reduce our reliance on massive server clusters and mitigate the carbon footprint.

Conclusion: Embrace the Agent Revolution

Instead of chasing the AI model arms race, a more productive and sustainable approach is to invest in custom AI agents. With the use of tools, RAG, and iterative self-correction, smaller, more efficient models can outperform massive models in many real-world scenarios, particularly in software development and other specialized tasks.

It’s only a matter of time before we see widespread adoption of agents leveraging local LLMs. They will deliver speed, accuracy, privacy and environmental responsibility without the massive price tag. The real future of AI is smart, efficient, and sustainable.

Reach out to Nimblesite on 1300 794 205, send us an email at sales at nimblesite.co, or fill out the contact form to chat about your AI Agent needs.

References

Ruan, J., Chen, Y., et al. (2023). TPTU: Task Planning and Tool Usage of Large Language Model-based AI Agents. OpenReview.net

McKinsey and Company (2024). What is a context window?

Bahic, M. (2024). What is a token in AI and why is it so important? TechRadar

Lewis, P., Perez, E., et al. (2020). Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. NeurIPS

Karpukhin, V., et al. (2020). Dense Passage Retrieval for Open-Domain Question Answering. EMNLP

Ruf, B. (2024). Navigating the environmental impact of AI. OECD.AI

Markidis, S., Raff, D., et al. (2024). MLPerf Power: Benchmarking the Energy Efficiency of Machine Learning Systems from Microwatts to Megawatts for Sustainable AI. arXiv

Zhu, Y., Wang, L., Liu, J., Xu, H., Liu, Z., et al. (2023). Optimizing Local AI: Hardware Advancements and Feasibility for Advanced Models. arXiv